I have three public-facing sites that I’d rather not have to manage with WordPress any more. None of them do anything fancy; they’re just a collection of pages and posts. I spun them up and started adding content back in the days when WordPress was all the rage. Recently, I’ve been looking at ditching the WordPress hosting burden and converting them to simple static websites, which should save me time and money in the long run.

The Epic

To convert three WordPress sites to Hugo, with CI/CD and monitoring configured.

As there are only three non-critical sites involved, there is no need to automate the migration process.

The Design Decisions

The first decision was what to use to generate the static site content. A quick review of the current static site generator landscape led to the first architectural design decision: Hugo would be the replacement, largely for these reasons.

The second task was to determine how and where I would host the content. We’re not talking ‘mission critical’ here. At some point I’d like to try hosting it all on IPFS, but I still have more research to do on that front, and it could be a rabbit hole I don’t want to go down just yet. The next best option would be cheap object storage with a cheap CDN in front of it, such as Backblaze and CloudFlare, which would be simple and straightforward to set up. However, I’m currently working towards an AWS qualification, so leaning towards an AWS-based solution would help me become more familiar and comfortable with the best practices for using CloudFront in conjunction with S3 before they come up in the exam. It should be a solid, low-maintenance solution for now, and trivial to move to another platform any time I choose.

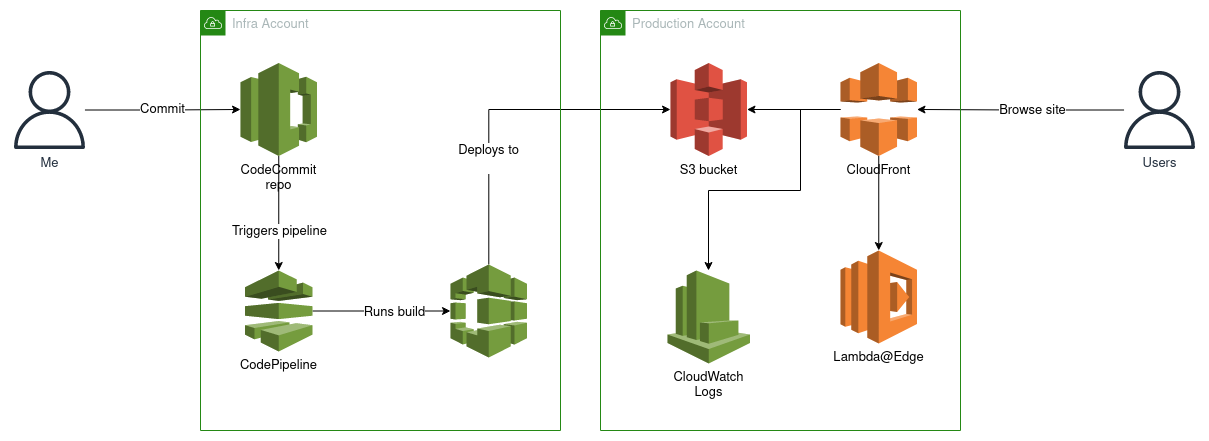

Another major architectural design decision was which CI/CD system to use to automate content generation, deployment and any other chores. In this case, I opted for CodeCommit for the git repo, CodePipeline to orchestrate the build and deploy, and CodeBuild to run the build. Again, the reason was to gain more exposure to AWS tools and practices. Pushes to the ‘master’ branch should trigger production deployments, and production deployments should send success or error notifications to a Discord channel. All of this machinery can be tracked and managed in Terraform.
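The notification machinery isn’t covered in this post. As a rough illustration only, a small Python Lambda subscribed to CodePipeline’s execution state-change events (delivered via an EventBridge rule, which is an assumption about the wiring) could post success and failure messages to a Discord webhook. The DISCORD_WEBHOOK_URL variable name and the message format are hypothetical.

```python
import json
import os
import urllib.request
from typing import Optional

# Hypothetical sketch: turn a CodePipeline "Pipeline Execution State Change"
# event (delivered by an EventBridge rule) into a Discord webhook message.
LABELS = {"SUCCEEDED": "Success", "FAILED": "Error"}


def build_discord_payload(event: dict) -> Optional[dict]:
    """Return a webhook payload, or None for states we don't report."""
    detail = event.get("detail", {})
    state = detail.get("state")
    if state not in LABELS:
        return None  # e.g. STARTED or SUPERSEDED
    text = (
        f"[{LABELS[state]}] Pipeline {detail.get('pipeline')} "
        f"{state.lower()} (execution {detail.get('execution-id')})"
    )
    return {"content": text}


def handler(event, context):
    payload = build_discord_payload(event)
    if payload is None:
        return
    request = urllib.request.Request(
        os.environ["DISCORD_WEBHOOK_URL"],  # hypothetical environment variable
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Set an explicit User-Agent; some endpoints reject urllib's default.
            "User-Agent": "pipeline-notifier/0.1",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```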

Monitoring and alerting for site uptime, downtime and third-party latency will be handled by UpDown.io, which I’ve been using for years and am quite happy with. I’ll treat it as the source of the main uptime SLA. As UpDown only keeps a limited history, I’ll also write a scheduled script (another design decision still to be made) to gather the key metrics from UpDown’s API and feed them into our metrics pooling system, which will most likely be Prometheus-based.

Logs will go to CloudWatch Logs with 90-day retention, rolling over to S3.
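A minimal boto3-based sketch of that logging policy in Python: put_retention_policy sets the retention, and a daily create_export_task approximates the “rollover” by copying the previous day’s events to S3. The log group name and key prefix are hypothetical, and the destination bucket needs a policy that lets CloudWatch Logs write to it.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple

LOG_GROUP = "/aws/lambda/cloudfront-invalidation"  # hypothetical log group name
RETENTION_DAYS = 90


def previous_day_window_ms(now: datetime) -> Tuple[int, int]:
    """Start and end of the previous UTC day, in epoch milliseconds."""
    end = now.astimezone(timezone.utc).replace(hour=0, minute=0, second=0, microsecond=0)
    start = end - timedelta(days=1)
    return int(start.timestamp() * 1000), int(end.timestamp() * 1000)


def apply_retention_and_export(logs, bucket: str, now: Optional[datetime] = None) -> str:
    """Set 90-day retention, then export yesterday's events to S3.

    `logs` is a boto3 CloudWatch Logs client, e.g. boto3.client("logs").
    """
    logs.put_retention_policy(logGroupName=LOG_GROUP, retentionInDays=RETENTION_DAYS)
    start_ms, end_ms = previous_day_window_ms(now or datetime.now(timezone.utc))
    response = logs.create_export_task(
        logGroupName=LOG_GROUP,
        fromTime=start_ms,
        to=end_ms,
        destination=bucket,
        destinationPrefix="cloudwatch-exports",  # hypothetical key prefix
    )
    return response["taskId"]
```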

Eventually, traffic analysis will be handled by Plausible.io.

A rough sketch of how that looks in my head:

Running Hugo

To get started, I installed the latest release (v0.94.2 at the time of writing) from its .deb package.

```shell
curl -sSL -o /tmp/hugo.deb https://github.com/gohugoio/hugo/releases/download/v0.94.2/hugo_0.94.2_Linux-64bit.deb
sudo dpkg -i /tmp/hugo.deb
```

You can find a download link for the latest version on the GitHub releases page.

Check it has installed as expected and is ready to use:

```shell
hugo version
```
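Because the same pinned release is downloaded again in the CI build later in this post, it can help to keep the version number in one place. A minimal Python sketch, assuming the v0.94.2 asset naming used above (later Hugo releases renamed their assets, so check the releases page) and that hugo version prints something shaped like hugo v0.94.2-&lt;commit&gt; linux/amd64 ...:

```python
import re
import subprocess
from typing import Optional

HUGO_VERSION = "0.94.2"  # the release pinned in this post


def hugo_deb_url(version: str = HUGO_VERSION) -> str:
    # Matches the v0.94.2 asset name; later releases changed their naming.
    return (
        "https://github.com/gohugoio/hugo/releases/download/"
        f"v{version}/hugo_{version}_Linux-64bit.deb"
    )


def parse_hugo_version(output: str) -> Optional[str]:
    # Assumes output like "hugo v0.94.2-<commit> linux/amd64 BuildDate=...".
    match = re.search(r"\bv(\d+\.\d+\.\d+)", output)
    return match.group(1) if match else None


def installed_matches_pin() -> bool:
    """Run `hugo version` and compare it with the pinned release."""
    result = subprocess.run(["hugo", "version"], capture_output=True, text=True, check=True)
    return parse_hugo_version(result.stdout) == HUGO_VERSION
```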

Preparing a site

First, we need to prepare a directory structure as per Hugo’s requirements.

```shell
hugo new site testsite
```

This will create a testsite folder with the initial ‘scaffold’ directory structure in which you can start to add content and customise the defaults.

At this point the site is unconfigured and has no theme or layout. I’m not a TOML fan (too many MLs!), so I ditched the generated config.toml and made my own config.yaml:

```yaml
baseURL: "https://mysite.tld/"
languageCode: "en-gb"
title: "My Test Hugo Site"
theme: "mytheme"
```

Themes

You may have noticed that mytheme has not been created yet.

Hugo is quite popular, so there are tons of themes out there you can work with. I took the smallest, simplest one I could find and used it as the basis for my own theme, copying it into place as themes/mytheme.

To see what you’re working with, run Hugo’s local server:

```shell
hugo server -D
```

The output includes a localhost URL that you can use to view the site during development.
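The -D flag tells the server to include content marked as a draft. A plain hugo build, such as the one the CI job runs later in this post, leaves drafts out, so it’s worth knowing which posts are still drafts before publishing. A small Python helper, assuming YAML front matter between --- lines (Hugo also supports TOML and JSON front matter, which this ignores):

```python
from pathlib import Path
from typing import Dict, List


def front_matter(text: str) -> Dict[str, str]:
    """Read flat 'key: value' pairs from a YAML front matter block.

    Deliberately minimal: lists, nested maps and multi-line values are skipped.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front matter block
        key, sep, value = line.partition(":")
        if sep and not line.startswith((" ", "\t", "-")):
            fields[key.strip()] = value.strip().strip("\"'")
    return fields


def find_drafts(content_dir: str = "content") -> List[str]:
    """List Markdown files marked as drafts, which a plain `hugo` build skips."""
    return [
        str(path)
        for path in sorted(Path(content_dir).rglob("*.md"))
        if front_matter(path.read_text(encoding="utf-8")).get("draft", "").lower() == "true"
    ]
```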

Now, develop the theme to your own requirements. The site will automatically refresh in the browser as you save changes in your working directory.

By the end of the process I had replaced the vast majority of the existing theme code with code I had copied in and adapted from the HTML and CSS of the WordPress theme from the original site. It was starting to look and feel like the existing site.

Static assets

Static assets are placed in the static folder.

My styling needs are very simple. Other than the basic CSS file, I also used the very useful favicon.io site to create a set of static icon files for the site.

Source control

As soon as I had something tangible, I started a git repository and began committing small incremental changes as I was constructing the theme and managing the content.

Once things started to take shape, I created a new CodeCommit repository in my AWS ‘Infra’ account, using Terraform. I pushed the initial commit and received notification of the commit on Discord, thanks to a Lambda integration I’ll blog about another time.

Migrating existing content from WordPress

This wasn’t too difficult, thanks to this excellent script.

I logged into the WordPress admin area and exported the site as an XML dump file. I then ran this script using the XML dump file as input. As output, it created a folder structure containing the posts and their images, ready to be moved into the content/posts folder of my Hugo working directory.
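That script isn’t reproduced here, but for a sense of what it works with: a WordPress export (WXR) is an RSS feed with extra namespaces. This standard-library Python sketch isn’t that script; it just pulls the basic fields out of published posts. The wp namespace version (1.2) is an assumption that matches recent exports.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Union

# Namespaces used in WordPress WXR exports. The "1.2" in the wp URI matches
# recent exports; older exports may use a different version (an assumption).
NS = {
    "wp": "http://wordpress.org/export/1.2/",
    "content": "http://purl.org/rss/1.0/modules/content/",
}


def published_posts(wxr: Union[str, bytes]) -> List[Dict[str, str]]:
    """Extract basic fields from published posts in a WXR export."""
    root = ET.fromstring(wxr)
    posts = []
    for item in root.iter("item"):
        if item.findtext("wp:post_type", namespaces=NS) != "post":
            continue  # skip pages, attachments, menu items and so on
        if item.findtext("wp:status", namespaces=NS) != "publish":
            continue  # skip drafts, private and trashed posts
        posts.append({
            "title": item.findtext("title", default=""),
            "slug": item.findtext("wp:post_name", default="", namespaces=NS),
            "date": item.findtext("wp:post_date", default="", namespaces=NS),
            "html": item.findtext("content:encoded", default="", namespaces=NS),
        })
    return posts
```

Passing the file as bytes, e.g. published_posts(Path("export.xml").read_bytes()), lets the parser honour the export’s own XML encoding declaration.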

I still had to go through each post and manually tidy up the Markdown and add some extra fields to the front matter for each page. Luckily, I’m not a very active blogger so there were only a couple of dozen pages to go through.
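As an example of the kind of front matter involved, here is a small Python helper that builds a YAML front matter block for a post. The field set (title, date, slug, draft, tags) is illustrative rather than the exact set I used.

```python
import json
from datetime import datetime
from typing import Iterable


def hugo_front_matter(title: str, date: datetime, slug: str,
                      tags: Iterable[str] = (), draft: bool = False) -> str:
    """Build a YAML front matter block for a Hugo content file.

    Strings are emitted as JSON-quoted scalars, which are also valid YAML,
    so titles containing colons or quotes don't break the block.
    """
    lines = [
        "---",
        f"title: {json.dumps(title)}",
        f"date: {date.isoformat()}",
        f"slug: {json.dumps(slug)}",
        f"draft: {'true' if draft else 'false'}",
    ]
    tags = list(tags)
    if tags:
        lines.append("tags: " + json.dumps(tags))  # a YAML flow sequence
    lines.append("---")
    return "\n".join(lines) + "\n"
```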

Continuous Deployment

Eventually I reached a point where it was working well enough locally that I was ready to make an initial attempt at setting up the deployment workflow.

But first, I needed to set up the destination for the deployment.

S3 Bucket

So, I started by creating an ‘Origin Access Identity’ in CloudFront to represent the entity that would be making REST calls to the bucket, so that I could set up my S3 bucket policy correctly.

Next, I created a new S3 bucket in my AWS ‘Production’ account, with ‘Block all public access’ enabled, and applied a simple bucket policy to allow CodePipeline to deploy to it and CloudFront to read from it.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::111111111111:role/service-role/AWSCodePipelineServiceRole-ap-southeast-1-hugo-homepage"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": [
        "arn:aws:s3:::homepage-222222222222/*"
      ]
    },
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EFHBOWLQABCDE"
      },
      "Action": [
        "s3:GetObject"
      ],
      "Resource": [
        "arn:aws:s3:::homepage-222222222222/*"
      ]
    }
  ]
}
```
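Since the rest of the infrastructure is managed in code, the same policy could be generated rather than hand-edited. A minimal Python sketch that rebuilds the policy above from the bucket name, pipeline role ARN and Origin Access Identity ID (the values passed in are the placeholders used in this post):

```python
import json


def homepage_bucket_policy(bucket: str, pipeline_role_arn: str, oai_id: str) -> dict:
    """Build the bucket policy: pipeline writes, CloudFront (via OAI) reads."""
    objects = f"arn:aws:s3:::{bucket}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # CodePipeline's service role deploys (and reads) objects.
                "Effect": "Allow",
                "Principal": {"AWS": pipeline_role_arn},
                "Action": ["s3:GetObject", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": [objects],
            },
            {
                # CloudFront reads objects through the Origin Access Identity.
                "Effect": "Allow",
                "Principal": {
                    "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {oai_id}"
                },
                "Action": ["s3:GetObject"],
                "Resource": [objects],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(homepage_bucket_policy(
        "homepage-222222222222",
        "arn:aws:iam::111111111111:role/service-role/AWSCodePipelineServiceRole-ap-southeast-1-hugo-homepage",
        "EFHBOWLQABCDE",
    ), indent=2))
```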

I used aws s3 sync to copy my local public folder to the bucket so I could test things while setting everything up.

CloudFront

S3 websites are good for some things, but they have limits. As far as I know, S3 website endpoints only serve HTTP, so you can’t attach your own SSL certificate and use a bucket directly to host one of your custom domains over HTTPS. To achieve that with AWS, you need CloudFront to handle the public-facing requests.

If you point CloudFront at the S3 bucket’s website endpoint, you can take advantage of S3’s index document setting to return the index.html object for a directory request. However, that requires the objects in the bucket to be publicly readable, which may not be desirable. I prefer to block public access on all my S3 buckets.

That means we must configure CloudFront with an S3 origin, as opposed to a custom HTTP origin pointing at the website endpoint. This is best practice, and allows us to secure the bucket with a policy that only allows CodePipeline to deploy new content and CloudFront to read objects from the bucket to fulfil requests. The CloudFront Origin Access Identity is used in the policy to identify the CloudFront service.

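However, index.html no longer works for directory requests, because CloudFront now fetches objects through the S3 REST API rather than the website endpoint, and the REST API has no concept of an index document: a request for /posts/ simply asks S3 for an object with the key posts/, which doesn’t exist. In CloudFront you can specify a default root object to be returned in place of a request for the / root URI, but that doesn’t apply to subfolders, so it isn’t a solution in this case.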

Lambda@Edge

It turns out the solution is to use a Lambda@Edge function that rewrites directory requests to their index.html objects. I found this more compact version of the script.
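That script isn’t reproduced here. As an illustration of the same technique, here is a minimal sketch in Python (Lambda@Edge supports Python runtimes as well as Node.js) that rewrites directory-style request URIs to the index.html objects Hugo generates before CloudFront asks S3 for them. Treating any path without a file extension as a directory is an assumption that suits Hugo’s default URLs.

```python
import posixpath


def rewrite_uri(uri: str) -> str:
    """Map directory-style URIs onto the index.html objects Hugo generates."""
    if uri.endswith("/"):
        return uri + "index.html"  # /posts/ -> /posts/index.html
    if "." not in posixpath.basename(uri):
        return uri + "/index.html"  # /posts/my-post -> /posts/my-post/index.html
    return uri  # files such as /css/style.css pass through unchanged


def handler(event, context):
    # CloudFront supplies the request at Records[0].cf.request; returning the
    # modified request lets CloudFront carry on to the S3 origin.
    request = event["Records"][0]["cf"]["request"]
    request["uri"] = rewrite_uri(request["uri"])
    return request
```

Lambda@Edge functions have to be created in us-east-1 (N. Virginia) and associated with the distribution as a published version. Attaching this one to the origin-request event means it only runs on cache misses.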

CodePipeline

Next, I added a simple buildspec.yaml file to my repo.

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      python: 3.8
    commands:
      - apt-get update
      - echo Installing hugo
      - curl -L -o hugo.deb https://github.com/gohugoio/hugo/releases/download/v0.94.2/hugo_0.94.2_Linux-64bit.deb
      - dpkg -i hugo.deb
      - rm hugo.deb
  build:
    commands:
      - hugo -v

artifacts:
  files:
    - '**/*'
  base-directory: public
```

Then I built the CodePipeline manually to figure things out. Once it was basically working, I wrote the equivalent Terraform and imported the pipeline into state. The Terraform looks a bit like this:

```hcl
resource "aws_codepipeline" "codepipeline" {
  name     = var.name
  role_arn = var.role_arn

  artifact_store {
    location = var.s3_artifact_store
    type     = "S3"
  }

  stage {
    name = "Source"

    action {
      name             = "Source"
      namespace        = "SourceVariables"
      category         = "Source"
      owner            = "AWS"
      provider         = "CodeCommit"
      version          = "1"
      output_artifacts = ["SourceArtifact"]

      configuration = {
        BranchName           = "master"
        OutputArtifactFormat = "CODE_ZIP"
        # No polling: pushes are expected to trigger the pipeline via an
        # EventBridge (CloudWatch Events) rule.
        PollForSourceChanges = "false"
        RepositoryName       = "hugo-homepage"
      }
    }
  }

  stage {
    name = "Build"

    action {
      name             = "Build"
      namespace        = "BuildVariables"
      category         = "Build"
      owner            = "AWS"
      provider         = "CodeBuild"
      input_artifacts  = ["SourceArtifact"]
      output_artifacts = ["BuildArtifact"]
      version          = "1"

      configuration = {
        ProjectName = "hugo-homepage"
      }
    }
  }

  stage {
    name = "Deploy"

    action {
      name            = "Deploy"
      namespace       = "DeployVariables"
      category        = "Deploy"
      owner           = "AWS"
      provider        = "S3"
      input_artifacts = ["BuildArtifact"]
      version         = "1"
      run_order       = 1

      configuration = {
        BucketName = "homepage-222222222222"
        CannedACL  = "bucket-owner-full-control"
        Extract    = "true"
      }
    }

    # Invalidate the CloudFront cache once the new files are in the bucket.
    # Actions with the same run_order run in parallel, so this needs a
    # higher run_order than the Deploy action above.
    action {
      name             = "cloudfront-invalidation"
      category         = "Invoke"
      owner            = "AWS"
      provider         = "Lambda"
      version          = "1"
      region           = "ap-southeast-1"
      run_order        = 2
      input_artifacts  = []
      output_artifacts = []

      configuration = {
        FunctionName = "cloudfront-invalidation"
      }
    }
  }
}
```
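The cloudfront-invalidation function invoked by the last action isn’t covered in this post. As a rough sketch of what it might look like in Python: it invalidates every path in the distribution and then reports back to CodePipeline, which otherwise keeps waiting until the action times out. Reading the distribution ID from a DISTRIBUTION_ID environment variable is a hypothetical choice, and the clients can be passed in so the logic can be tried without AWS.

```python
import os


def handler(event, context, cloudfront=None, codepipeline=None):
    """Invalidate the whole CloudFront distribution, then report to CodePipeline."""
    if cloudfront is None or codepipeline is None:
        import boto3  # bundled with the AWS Lambda Python runtime

        cloudfront = cloudfront or boto3.client("cloudfront")
        codepipeline = codepipeline or boto3.client("codepipeline")

    job_id = event["CodePipeline.job"]["id"]
    try:
        cloudfront.create_invalidation(
            DistributionId=os.environ["DISTRIBUTION_ID"],  # hypothetical variable
            InvalidationBatch={
                "Paths": {"Quantity": 1, "Items": ["/*"]},
                # Must be unique per request; the job ID is unique per run.
                "CallerReference": job_id,
            },
        )
    except Exception as exc:  # any failure should fail the pipeline action
        codepipeline.put_job_failure_result(
            jobId=job_id,
            failureDetails={"type": "JobFailed", "message": str(exc)[:500]},
        )
        return
    codepipeline.put_job_success_result(jobId=job_id)
```

The function’s execution role would need permission for cloudfront:CreateInvalidation and for codepipeline:PutJobSuccessResult and codepipeline:PutJobFailureResult.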

Summary

We’ve created a Hugo site and theme, imported the old content and checked it into a git repository. We’ve also created a deployment pipeline to automatically publish the site to an S3 bucket.

[Edit] There are a few steps missing here as this article was written a few years ago and I didn’t get round to finishing it off at the time. I’ve decided to summarise it and post it now anyway as it may still contain information useful to some people. I still use Hugo for these sites, but I’ve since moved away from using AWS so I no longer have the necessary configs to fill in the gaps. Please feel free to contact me if you have any specific questions.